Junbo Li

I’m currently a visiting student at the Sailing-MBZUAI Lab, working with Prof. Eric Xing. I’m broadly interested in making AI efficient and reliable from a machine learning and optimization perspective, balancing theoretical understanding with practical application to address large-scale real-world challenges. My most recent research focuses on large language models, including pre-training, fine-tuning, and inference. My work includes publications at conferences such as ICLR [1], NeurIPS [2, 3], and ECCV [4], as well as large open-source projects like LLM360.

Previously, I completed my master’s degree in computer science at UC Santa Cruz in June 2023, focusing on machine learning and computer vision. In 2023, I also collaborated with Prof. Ruqi Zhang on efficient training of Bayesian neural networks. Before that, I received my bachelor’s degree in mathematics and applied mathematics from Fudan University in June 2021. During my undergraduate studies, I worked on reinforcement learning theory with Prof. Zhaoran Wang and Zhuoran Yang.

news

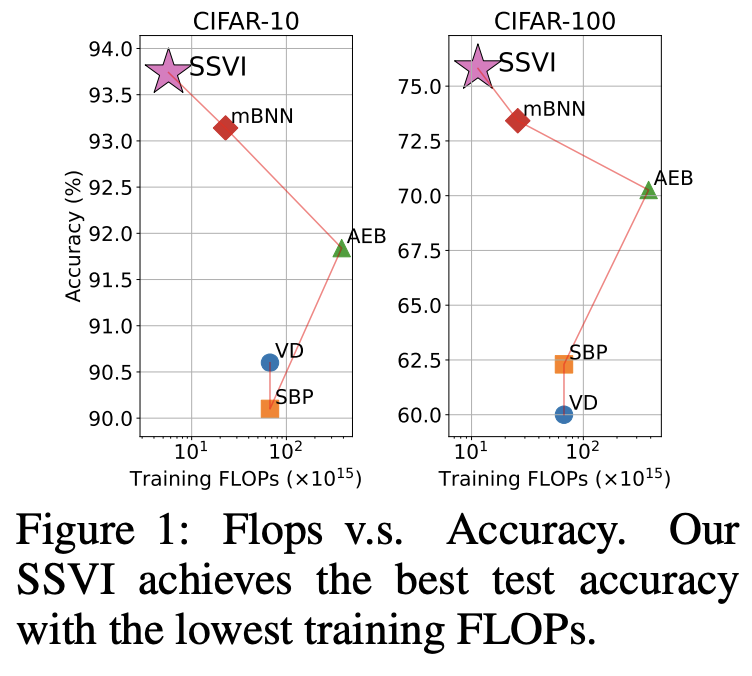

| Jan 16, 2024 | My first-author paper Sparse Subspace Variational Inference is accepted by ICLR 2024. |

|---|---|

| Dec 14, 2023 | We release LLM360, including two 7B base models training from scratch: Amber and CrystalCoder, as well as the fine-tuned versions: AmberChat, AmberSafe, and CrystalChat. Our CrystalChat outperforms both Llama 2 and CodeLlama on both English and code benchmarks. |

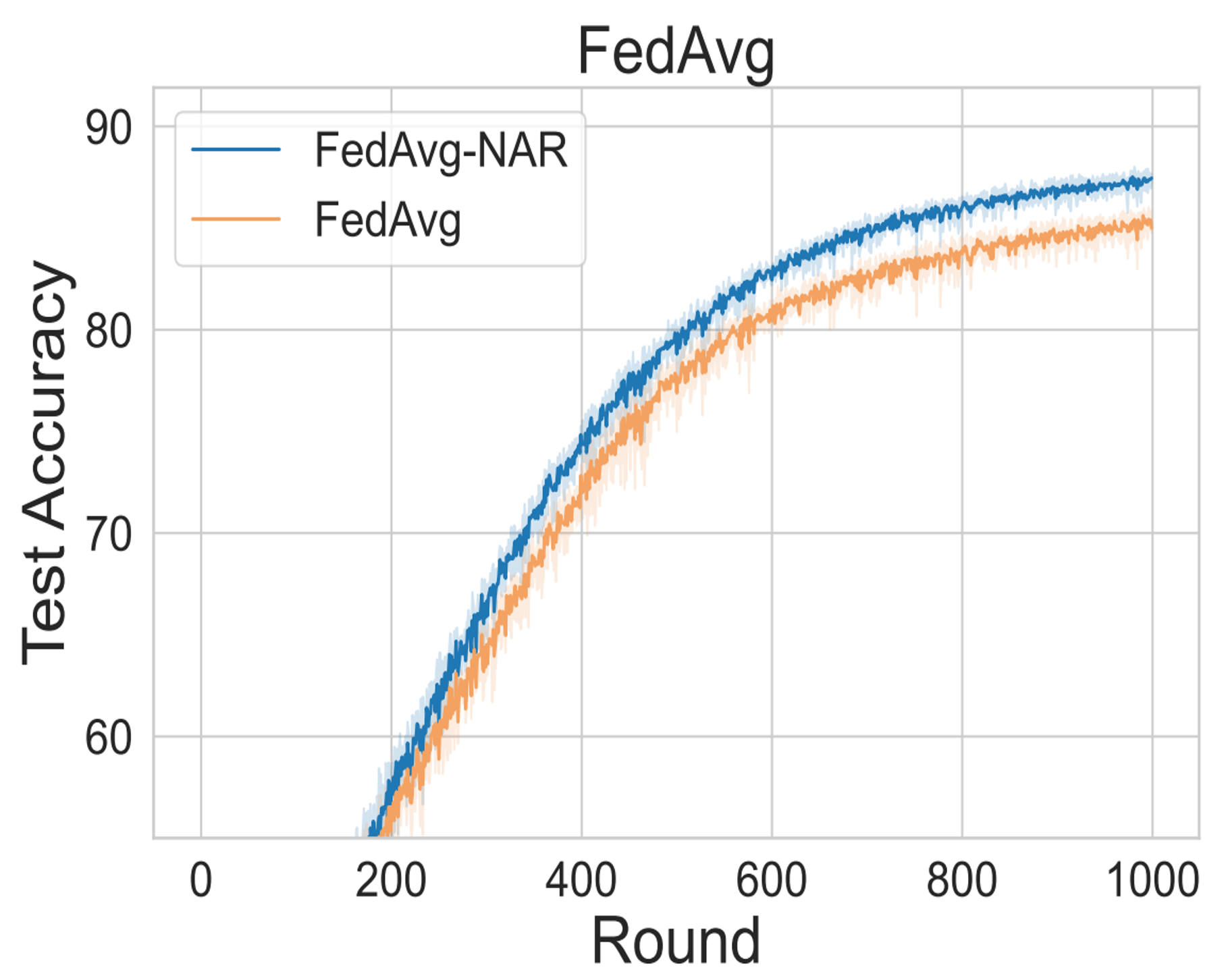

| Sep 21, 2023 | My first-author paper FedNAR is accepted by NeurIPS 2023, and is featured in the recent spotlight track at CPAL 2024. |

| Jun 27, 2023 | Glad to join Sailing-MBZUAI lab as a visiting student. |

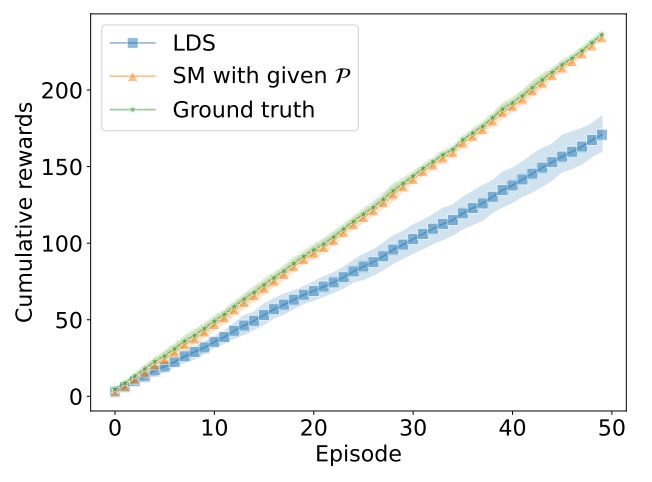

| Sep 14, 2022 | My co-first-author paper Score Matching for RL is accepted by NeurIPS 2022 as the oral presentation. |

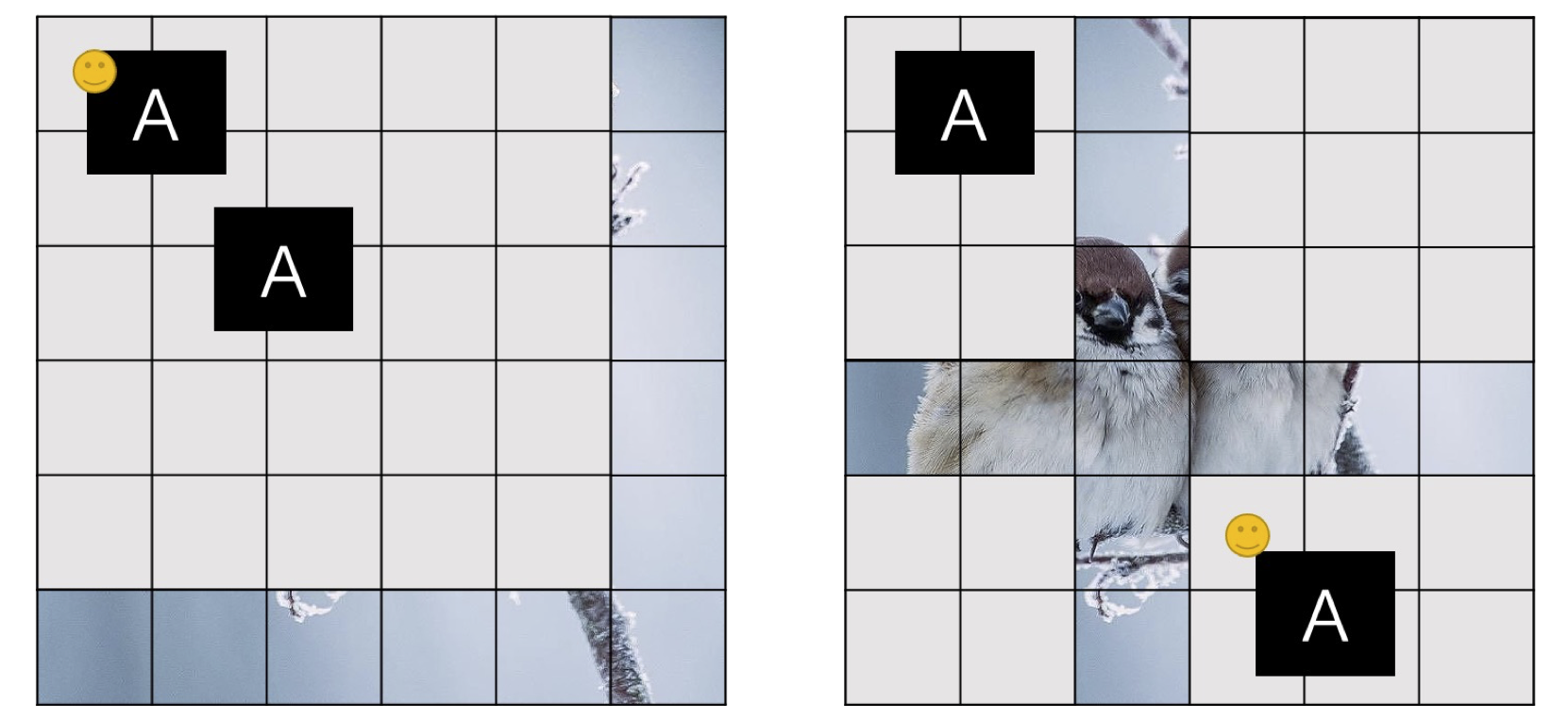

| Jul 08, 2022 | My first-author paper ViP is accepted by ECCV 2022. |