Junbo Li

I’m a second-year Ph.D. student in Computer Science at UT Austin, where I am fortunate to be co-advised by Prof. Atlas Wang and Prof. Qiang Liu. I am a recipient of the Amazon AI Ph.D. Fellowship.

My current research focuses on agentic reasoning LLMs and reinforcement learning with applications in deep research. Below is a selected list of research areas I’ve worked on (red denotes first-author contributions):

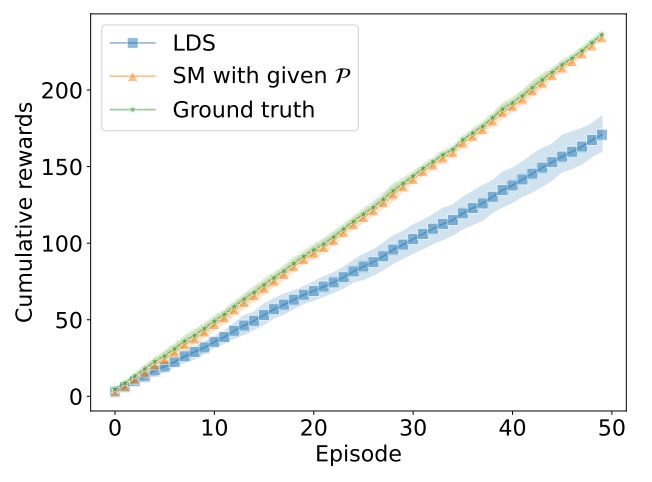

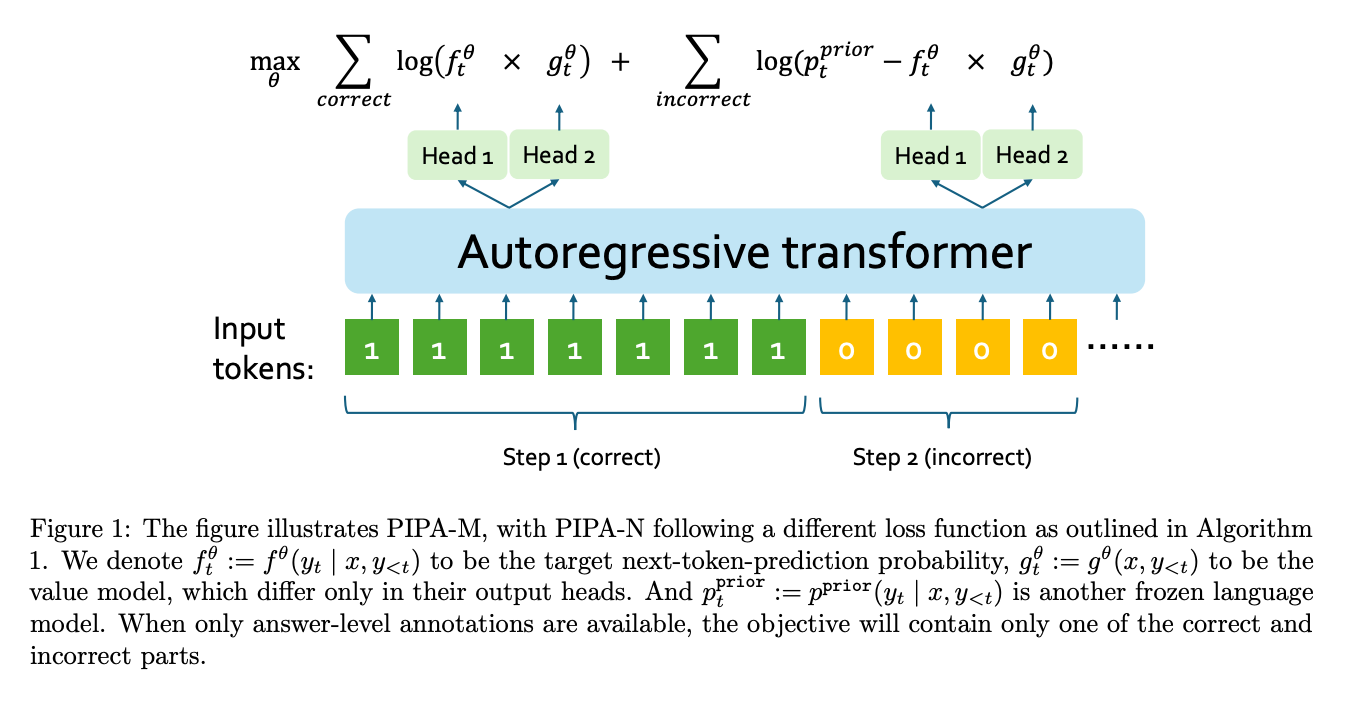

- Agents, reasoning, and reinforcement learning: Turn-PPO (EACL 2026), Prior-Informed Preference Alignment (ICML 2025), Web2Code (NeurIPS 2024), Score Matching for Reinforcement Learning (NeurIPS 2022)

- Scaling laws, data mixtures, hyperparameter transfer, and code LLMs: LLM360 (COLM 2024), CrystalCoder (COLM 2024)

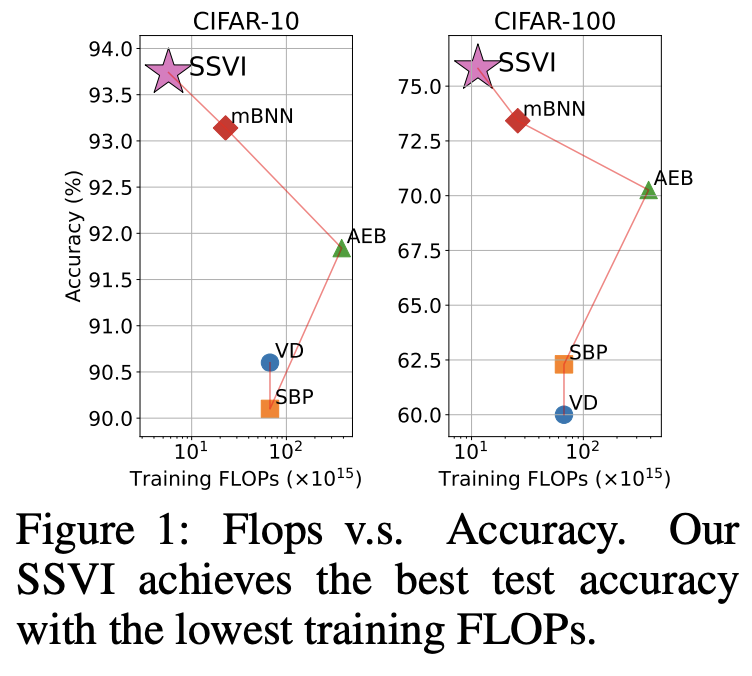

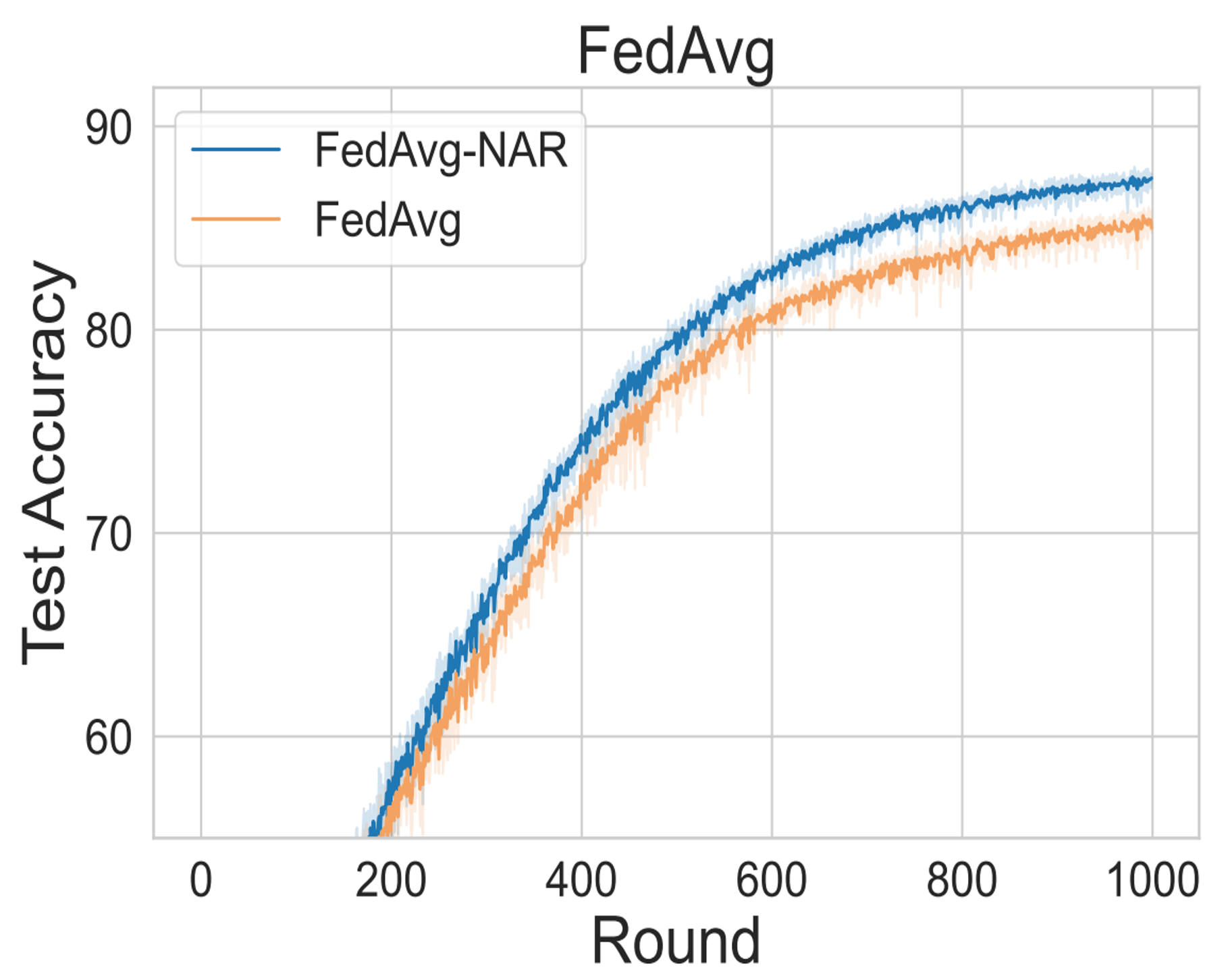

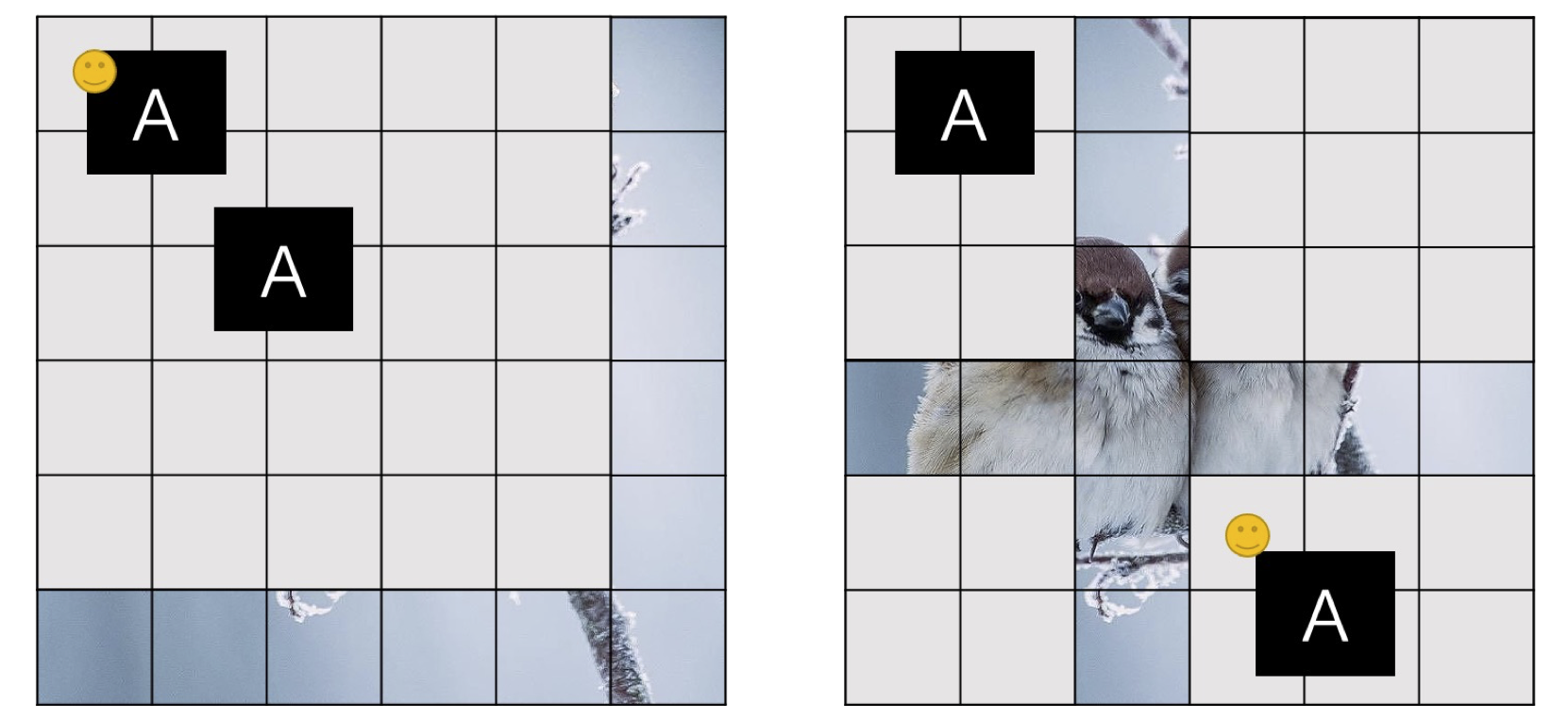

- Optimization and probabilistic machine learning: Hierarchical Asynchronous Local SGD (ICML 2025), Sparse Subspace Variational Inference (ICLR 2024), FedNAR (NeurIPS 2023), ViP (ECCV 2022)

I was a visiting student with Prof. Eric Xing from 2023 to 2024 and with Prof. Ruqi Zhang in 2023. I earned my master’s degree in computer science from UC Santa Cruz in 2023 and my bachelor’s degree in mathematics and applied mathematics from Fudan University in 2021. During my undergraduate studies, I worked with Prof. Zhaoran Wang and Prof. Zhuoran Yang. In high school, I trained for mathematics competitions for one year and won a silver medal in the China Mathematical Olympiad (CMO).

news

| Jan 04, 2026 | My first-author paper Turn-PPO is accepted by EACL Findings 2026. |

|---|---|

| Dec 16, 2025 | Honored to receive the Graduate Dean’s Prestigious Fellowship. |

| Sep 22, 2025 | Glad to start an internship at Snowflake. |

| Aug 15, 2025 | Honored to be selected as an Amazon AI Ph.D. Fellow with 2 years of funding. Thank you, Amazon! |

| May 12, 2025 | Glad to start an internship at Amazon Rufus. |

| May 03, 2025 | Two papers accepted by ICML 2025: Prior-Informed Preference Alignment (first-author) and Hierarchical Asynchronous Local SGD. |

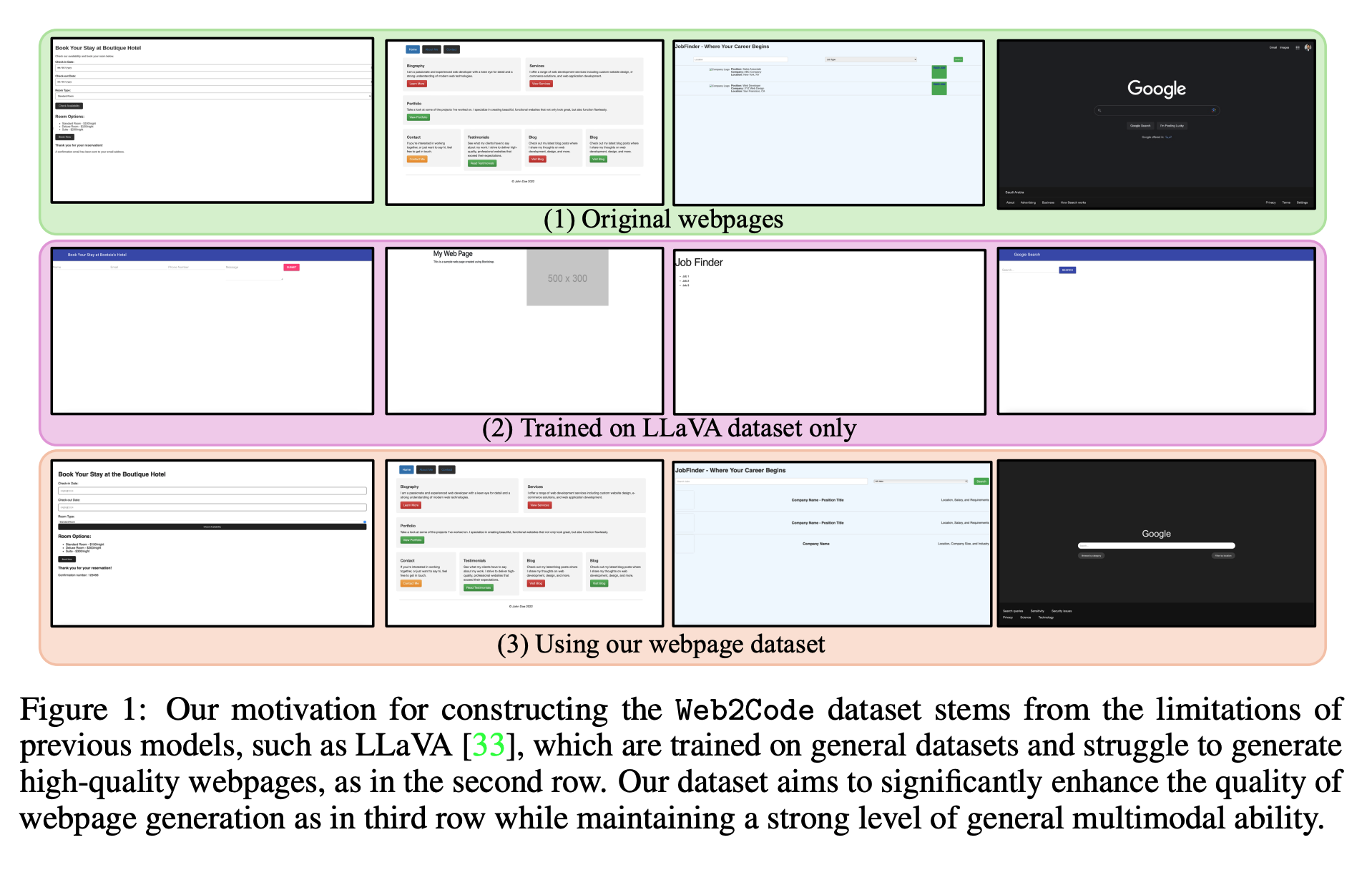

| Sep 26, 2024 | Our Web2Code is accepted by NeurIPS 2024. |

| Aug 24, 2024 | Glad to start my Ph.D. study at UT Austin. |

| Jul 10, 2024 | Our LLM360 and CrystalCoder are accepted by COLM 2024. |

| Jan 16, 2024 | My first-author paper Sparse Subspace Variational Inference is accepted by ICLR 2024, and is featured in the recent spotlight track at CPAL 2025. |

| Dec 14, 2023 | We release LLM360, including two 7B base models training from scratch: Amber and CrystalCoder, as well as the fine-tuned versions: AmberChat, AmberSafe, and CrystalChat. Our CrystalChat outperforms both Llama 2 and CodeLlama on both English and code benchmarks. |

| Sep 21, 2023 | My first-author paper FedNAR is accepted by NeurIPS 2023, and is featured in the recent spotlight track at CPAL 2024. |

| Jun 27, 2023 | Glad to join Sailing-MBZUAI lab as a visiting student |

| Sep 14, 2022 | My co-first-author paper Score Matching for RL is accepted by NeurIPS 2022 as the oral presentation. |

| Jul 08, 2022 | My first-author paper ViP is accepted by ECCV 2022. |

selected publications


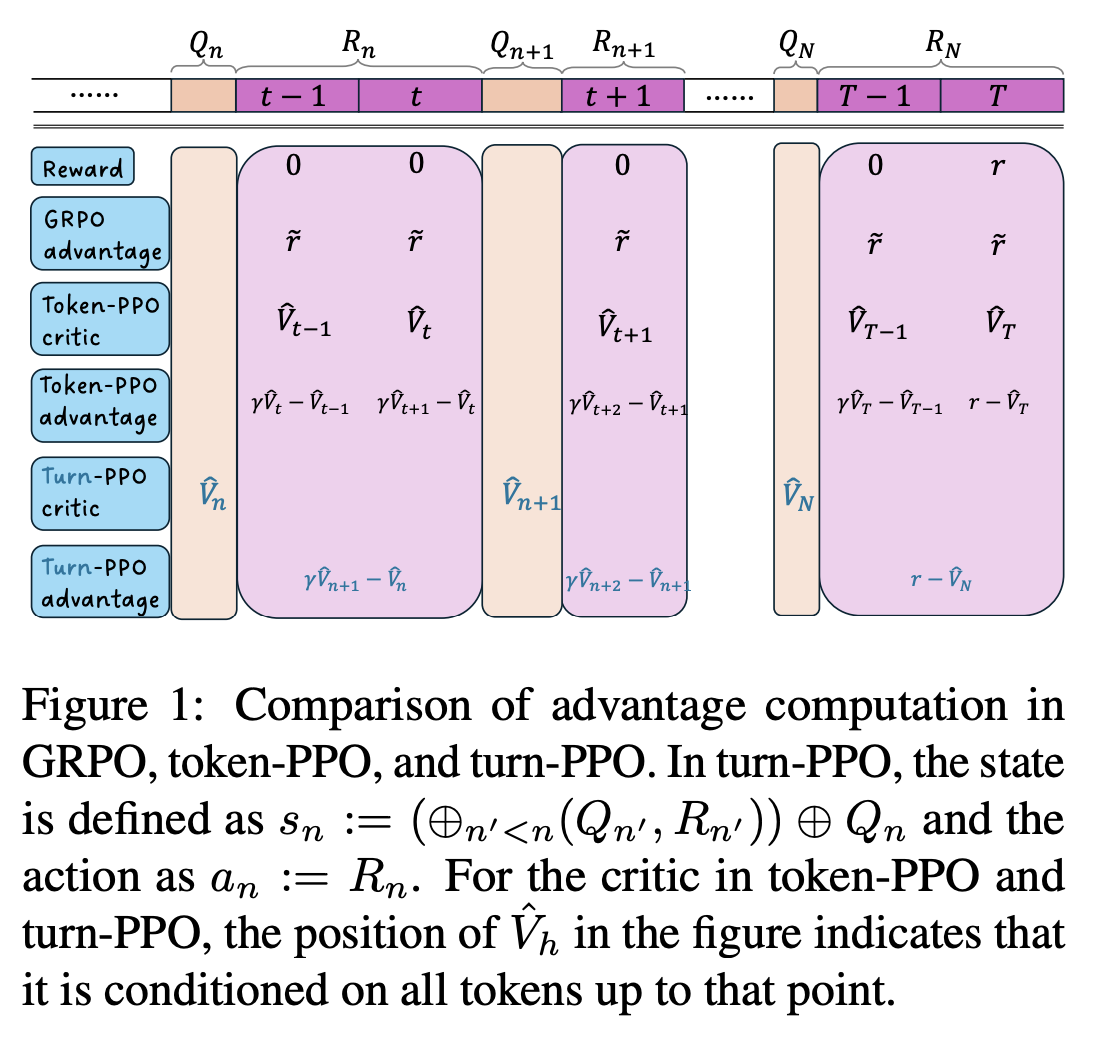

- Turn-PPO: Turn-Level Advantage Estimation with PPO for Improved Multi-Turn RL in Agentic LLMs. EACL Findings, 2026.



- HALoS: Hierarchical Asynchronous Local SGD over Slow Networks for Geo-Distributed Large Language Model Training. ICML, 2025.


- Web2Code: A Large-scale Webpage-to-Code Dataset and Evaluation Framework for Multimodal LLMs. NeurIPS Datasets and Benchmarks Track, 2024.
